Collecting and archiving tweets: a DataPool case study

Information presented to a user via Twitter is often called a ‘stream’: a constant flow of data passing the viewer or reader. The totality of information passing through Twitter at any moment is often referred to as a ‘firehose’, a gushing torrent of information. Blink and you’ve missed it. But does this information have only momentary value or relevance? Is there additional value in collecting, storing and preserving these data?

A short report from the DataPool Project describes a small case study in archiving collected tweets by, and about, DataPool. It explains the constraints imposed by Twitter on the use of such collections, describes how a service for collections evolved within these constraints, and illustrates the practical issues and choices that resulted in an archived collection.

An EPrints application called Tweepository collects and presents tweets based on a user’s specified search term over a specified period of time (Figure 1). DataPool and researchers associated with the project were among the early users of Tweepository, using the app installed on a test repository. Collections were based on the project’s Twitter user name, other user names, and selected hashtags from conferences or other events.
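The idea of a collection defined by a search term and a time period can be illustrated with a minimal Python sketch. This is not Tweepository’s code or data model: the `Tweet` and `Collection` classes, their fields, and the `collect` function are hypothetical names introduced purely for illustration, and the matching rule (term found in the text, or equal to the author’s user name) is an assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Tweet:
    # Hypothetical, simplified record; real tweet data carries many more fields.
    author: str          # user name without the leading "@"
    text: str
    created_at: datetime


@dataclass(frozen=True)
class Collection:
    # Hypothetical model of a collection: one search term plus a time window.
    search_term: str     # a hashtag such as "#example" or a user name such as "@alice"
    start: datetime
    end: datetime

    def matches(self, tweet: Tweet) -> bool:
        """Return True if the tweet is inside the window and matches the term."""
        in_window = self.start <= tweet.created_at <= self.end
        term = self.search_term.lower()
        in_text = term in tweet.text.lower()
        by_author = term.lstrip("@") == tweet.author.lower()
        return in_window and (in_text or by_author)


def collect(collection: Collection, tweets: list[Tweet]) -> list[Tweet]:
    """Keep only the matching tweets, ordered oldest first."""
    matching = [t for t in tweets if collection.matches(t)]
    return sorted(matching, key=lambda t: t.created_at)
```

In this sketch a collection for a conference hashtag and a collection for a user name differ only in the search term, which mirrors the kinds of collection described above.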

A dedicated institutional Tweepository was launched at the University of Southampton in late 2012. A packager tool enabled the ongoing test collections to be transferred to the supported Southampton Tweepository without a known break in service or collection.

Institutional services such as Tweepository are as yet unavailable elsewhere, so for completeness as an exemplar data case study we archived the tweet collections towards the end of the DataPool Project in March 2013. We used the provided export functions to create a packaged version of selected, completed collections for transfer to another repository at the university, ePrints Soton.

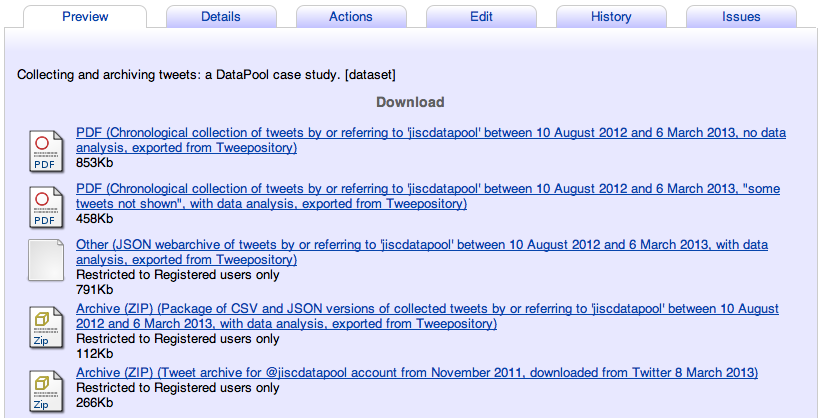

Attached to our archived tweet collections in ePrints Soton (see Figure 2) are:

- Reviewable PDF of the original Tweepository Web view (with some “tweets not shown…”)

- Reviewable PDF of complete tweet collection without data analysis, from HTML export format

- JSON Tweetstream* saved using the provided export tool

- Zip file* from the Packager tool

* reviewable only by the creator of the record or a repository administrator

Figure 2. File-level management in ePrints Soton, showing the series of files archived from Tweepository and Twitter

We have since added the zip archive of the Project’s Twitter account, downloaded directly from Twitter, spanning the whole period since the account was opened in November 2011. Twitter’s download service applies only to the archive of a registered Twitter user, not to the general search collections possible with Tweepository.

The value of the data in these collections and archival versions will be measured through reuse by other researchers. For now that value remains an open question, as it does for most research data entering the nascent services at institutions such as the University of Southampton.

Archiving tweets is a first step; realising the value of the data is a whole new challenge.

For more on this DataPool case study see the full report.